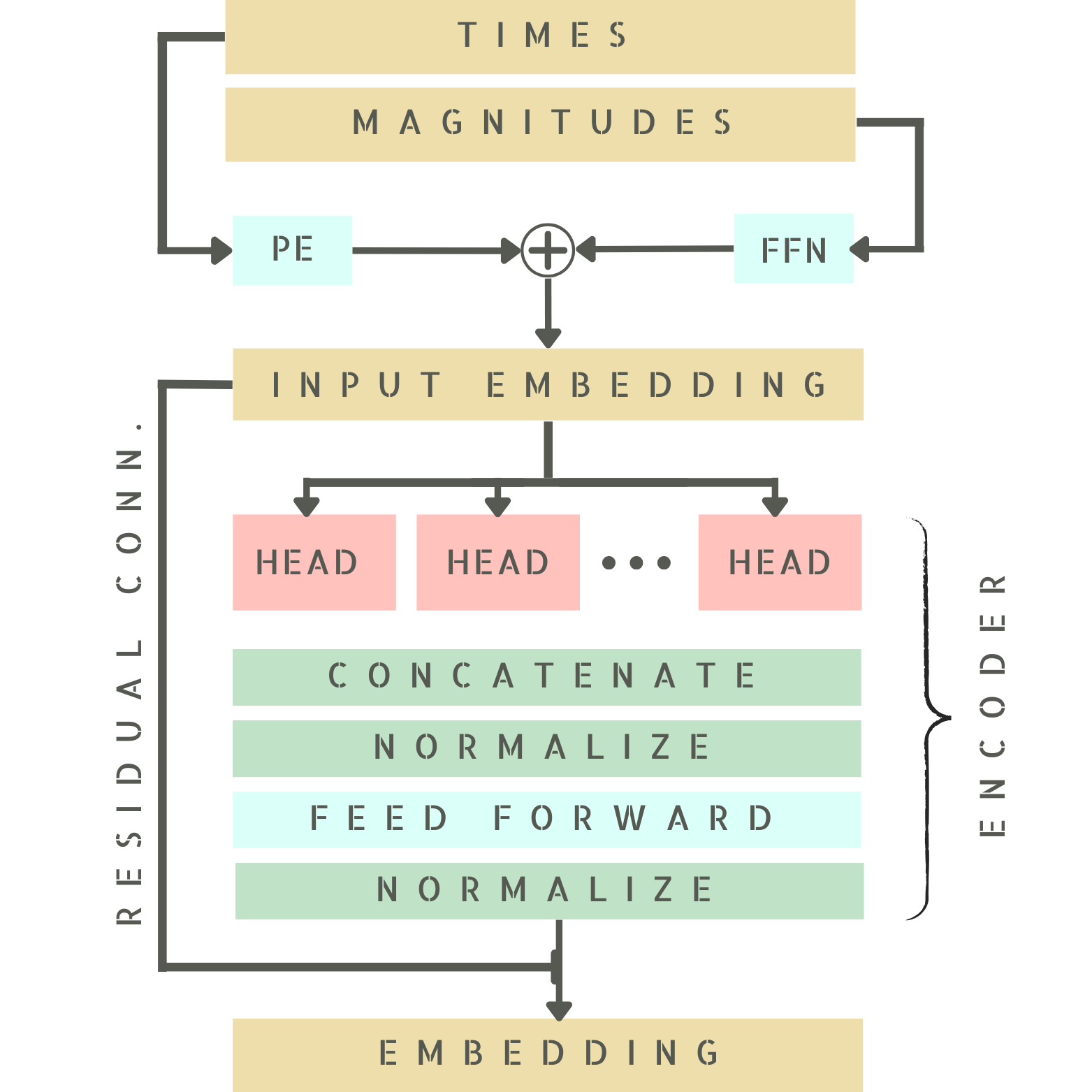

ASTROMER Architecture

ASTROMER is built on the encoder-decoder Transformer architecture proposed by Vaswani et al. (2017).
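For readers unfamiliar with the Transformer, the sketch below shows its core operation, scaled dot-product attention, in plain Python. It is an illustration only and is not taken from ASTROMER's code: the function names `softmax` and `scaled_dot_product_attention` and the toy input are assumptions made for this example, and a real model would add learned projections, multiple heads, and positional encodings.

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of floats."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries, keys, values):
    """Attention(Q, K, V) = softmax(Q K^T / sqrt(d_k)) V, as in Vaswani et al. (2017).

    Hypothetical helper for illustration; inputs are lists of equal-length vectors.
    """
    d_k = len(keys[0])
    output = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Weighted average of the value vectors.
        output.append(
            [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        )
    return output


# Toy sequence of three 2-dimensional embeddings (made-up numbers).
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
attended = scaled_dot_product_attention(x, x, x)  # self-attention: Q = K = V
```

Each output row is a convex combination of the value vectors, so every position in the sequence can draw on information from every other position.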

Pre-trained Weights

You can easily download the pre-trained weights of ASTROMER's model:

Contributing to ASTROMER

If you train a model from scratch, you can share your pre-trained weights by submitting a pull request to the weights repository.